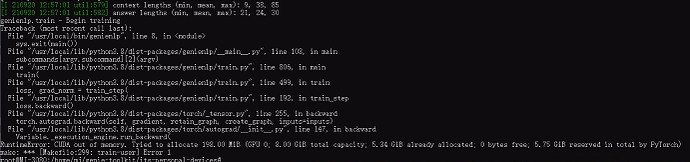

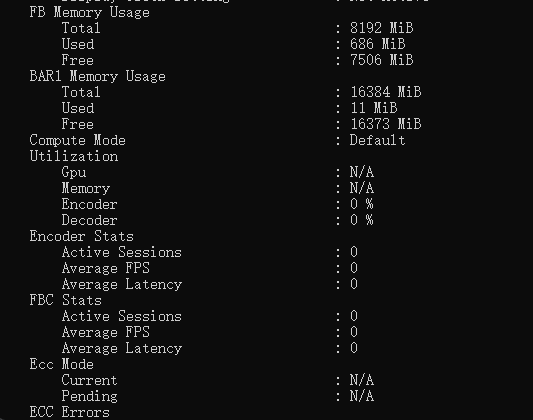

Yes, the laptop has an RTX 3080 GPU, so it should be quite powerful. I’m not sure what you meant by configuring CUDA for PyTorch. Is that an NVIDIA-specific thing? If so, how do we configure it for PyTorch?

The laptop is running Windows, and we’re doing the genie installation on an Ubuntu virtual machine available from the Microsoft Store. Could that be why training is slow?

Edit:

After doing some research, I found that CUDA is NVIDIA’s platform for running general-purpose computations on its GPUs, and that PyTorch can use CUDA to train on supported NVIDIA GPUs. The laptop we’re using seems to support CUDA, so do we need to install both CUDA and PyTorch in the VM?
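A quick way to check whether PyTorch inside the VM can actually see the GPU is a short script like the one below. It is a minimal sketch that only reads PyTorch’s own `torch.version.cuda`, `torch.cuda.is_available()` and `torch.cuda.get_device_name()`; the function name `describe_cuda_setup` is hypothetical. If `built_with_cuda` is `None`, the installed PyTorch is a CPU-only build; if it shows a version but `cuda_available` is `False`, PyTorch was built for CUDA but cannot reach the GPU from inside the VM.

```python
import importlib.util


def describe_cuda_setup() -> dict:
    """Hypothetical helper: report whether PyTorch is installed and can see a CUDA GPU."""
    info = {
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "torch_version": None,
        "built_with_cuda": None,  # CUDA version this PyTorch build was compiled against
        "cuda_available": False,
        "device_name": None,
    }
    if not info["torch_installed"]:
        # PyTorch is not installed in this Python environment at all
        return info

    import torch

    info["torch_version"] = torch.__version__
    # torch.version.cuda is None for CPU-only builds of PyTorch
    info["built_with_cuda"] = torch.version.cuda
    # True only if this build supports CUDA and a usable GPU is visible
    info["cuda_available"] = torch.cuda.is_available()
    if info["cuda_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
    return info


if __name__ == "__main__":
    for key, value in describe_cuda_setup().items():
        print(f"{key}: {value}")
```

Running it with `python3` inside the Ubuntu VM should print the RTX 3080 as `device_name` if everything is wired up.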

Edit 2:

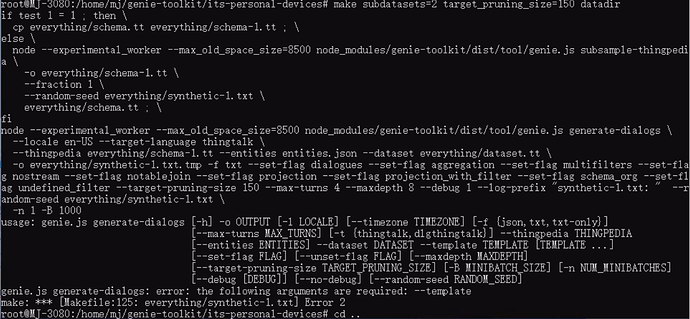

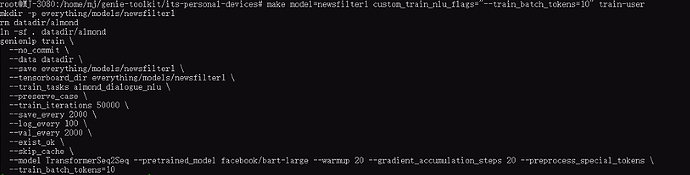

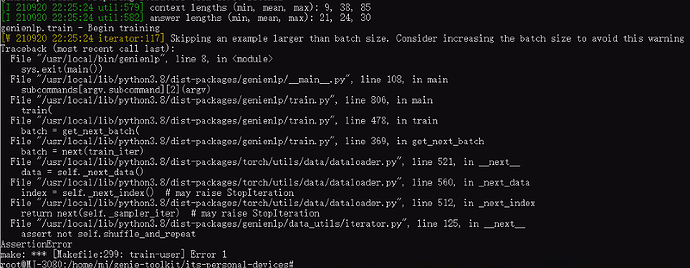

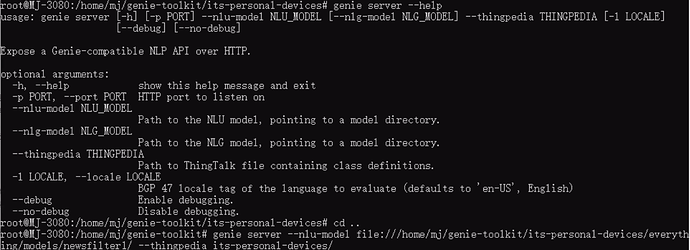

We managed to install CUDA 11.1 and PyTorch in the VM. However, since we reinstalled the entire VM, we also had to redo the entire local genie installation. For some reason, we are now getting this error when running the `make subdatasets=2 target_pruning_size=150 datadir` command:

I don’t think it has ever asked for a template before. Did something change in the genie repository? We copied everything needed and redid the installation following the steps we had recorded previously.